Server-Timing

Table of Contents

This is a cross-post from @stoyanstefanov’s 2018 Performance Calendar, the original can be found here.

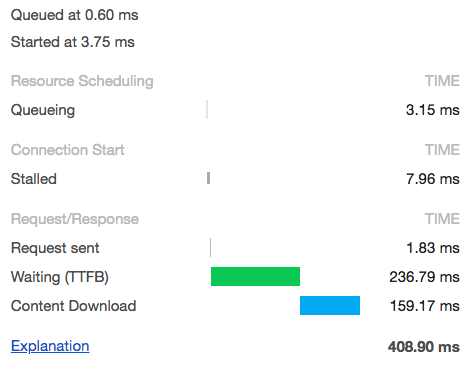

Consider the following timing data for a stylesheet request:

If you were staring at the browser who had to wait around for a response for those 236.79 milliseconds, you’d be hard pressed to find out what was going on. Maybe that time reflects RTT (roundtrip time) and my server responded instantly. Or maybe my server had to do a bunch of custom work to hand me back the bytes of my stylesheet. If I want my TTFB to be shorter, where do I focus my efforts?

Being able to collect and expose this timing information is important because, although the Navigation- and Resource-Timing APIs do a fantastic job measuring the work that a browser has to do when making requests, those APIs don’t help at all when it comes to the work that the server has to do to fulfill those requests. Even invaluable tools like Charles and Fiddler can’t provide any insight about exactly what happens after the request leaves the client and heads out into the tubes of the internet.

The dirty little secret of the above diagram (that we all know and love) is that it is not nearly drawn to scale. The slowest of the above buckets are typically the “Request” and “Response”. But further, it usually takes WAY longer to formulate the response than to ship those bytes back to the client. How much longer? Well, we looked at a few billion beacons for a subset of our retail customers over a multiple week period. The data showed that half of their resource requests were stuck in the “Request” bucket SIX TIMES (or more) longer than in the “Response” bucket.

This means that we have ZERO visbility into the slowest and potentially most problematic bucket of our diagram. And that’s exactly where Server-Timing steps in - it allows us blow up the blackbox between requestStart and responseStart, so that we can uncover the cause of slow TTFBs.

Introducing Server-Timing

Server-Timing, the newest of the *-Timing web performance APIs, provides a mechanism for developers to supplement the navigation and resource performance entries with timing data collected on the server. The API is available as of Chrome 65, Opera 52, Firefox 61 (https only), and Safari 12 (behind an experimental flag).

If, for example, a developer wants to report the duration of a particular database call that is made while generating an HTML response, by appending a Server-Timing response-header like this:

|

|

that timing data will be available on the navigation entry like this:

|

|

The only required field for a Server-Timing entry is a name - both duration and description are optional. Visualization tools (like browser devtools and RUM products) may try to interpret your data - charting your duration in milliseconds and preferring your description over name as a more human-readable label - but the truth is that duration is any number and description can be any string.

Excepting cookies, Server-Timing is the first web-exposed (that is, available to Javascript) response header. Gone are the days where we need to inject server-side timing information into the markup of a page - as a <SCRIPT> node that initializes JSON, or something more custom like HTML comments or hidden <TEXTAREA>s. With the new serverTiming attribute on PerformanceNavigationTiming and PerformanceResourceTiming entries, we can read those timers and beacon them back for collection/aggregation the same way we already do with our other RUM data!

Instrumentation

You can’t optimize what you can’t measure.

- Everyone, all of the time

You can think of Server-Timing a bit like User-Timing on the server - that is, you don’t get anything for free. You, the developer, need to instrument your backend code to benefit from the API.

Let’s take the simple example (using Node.js/Express) of serving an avatar image, where we verify that the requesting user has access to the image, and then we check our cache to decide if we need to make a database call.

|

|

In this example, checkACL() and loadFromDatabase() are potentially costly synchronous operations. Let’s instrument those methods to leverage the Server-Timing API.

|

|

Here you can see that we have wrapped the checkACL() and loadFromDatabase() function calls into new anonymous function expressions. We pass each function expression into a helper method named wrapServerTiming(), which times the execution and writes out the Server-Timing header to our response.

(It is important to note here that we append our new Server-Timing headers instead of blanketly setting them. We need to preserve existing Server-Timing headers that may have already been set on the response, perhaps by other middleware or the origin.)

Collection

Back in Javascript-land, that timing information can be accessed with a PerformanceObserver:

|

|

Or with the legacy and potentially racy getEntries*() APIs:

|

|

(Note that both of those snippets should produce the same output, though likely in different order.)

There are already many libraries that you can leverage today that makes instrumenting your code for Server-Timing a lot less manual. If you search on npm or github, chances are you will find a library for your stack. We’ve seen libraries for Node.js, Python, Ruby on Rails, C++11, ASP.NET Core, Elixir, Django, and Magento.

Compression

Server-Timing data can get pretty verbose. Until we have native compression in the browser, you will want to consider apply custom compression to the entries you’ve collected. More detail on how we do it can be found here.

Debugging

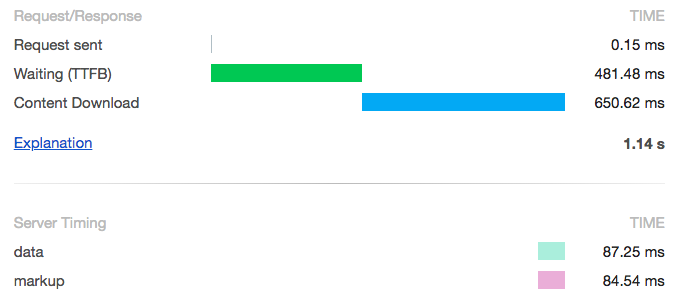

Because the Server-Timing API uses response headers on the wire, you will be able to debug your entries just like any other header, by using a proxy or the network tab of your favorite browser. Developer Tools in Chrome and Web Inspector in Safari both attempt to visualize your timers under a new “Server Timing” section under the “Timing” tab for a particular network request.

As you can see, each Server-Timing entry for a resource gets a row, and the duration value is charted using the same timing scale as the above section. Because Server-Timing only provides a duration (and not a startTime) the horizontal bars are pushed all the way to the right.

Caching

If a resource is cached in the browser, then its headers (including Server-Timing) will be cached as well. Developers need to be aware of this to decide which data should be reported.

For example, cached Server-Timing entries that report on actual back-end timers should probably be ignored, lest they skew the overall results. But, if Server-Timing is being used to communication metadata about the resource (image dimensions, which edge server, etc), then cached Server-Timing entries are still true and meaningful.

Using Resource-Timing, it’s fairly safe identify resource requests that never actually left the browser like this.

How We Use Server-Timing

We have been playing with Server-Timing at our little CDN for over a year, here’s what we’ve been cooking up:

We now emit the following Server-Timing entries for every resource that passes through our network (for mPulse-enabled properties):

"cdn-cache"- this enum indicates the cache status of a resource request- “HIT” - the request did not go to the origin

- “REVALIDATE” - the request went to the origin because our copy of the resource was stale

- “MISS” - everything else

"origin"- if the request went to the origin, this is the RTT (round-trip time), including origin think time, as measured by our edge server that communicates directly with the origin"edge"- the duration between the moment we get the request and when we begin to respond, less any"origin"time, as measured by the edge server that responds to the browser

Collecting and aggregating the cdn-cache status of all the resources we serve has allowed us to bubble up a new metric in our mPulse dashboards called “CDN Cache Hit Ratio”, which we also have broken down by content type.

Our new CDN timers help us “carve up” the “blame pie” of the request/response exchange. When we have the resource cached at the edge, you are going to see very very low edge times, which is good. But if the edge has to wait for data from the origin (uncacheable API calls), that’s when you’ll need to start paying very close attention to the origin times for your site.

With transparency about the services we provide in mind, we think that all CDNs should emit these Server-Timing entries. Doing so would allow our users to quantify in a standard way how well we are doing at offloading their traffic.

Other Use Cases

Remember that the duration and description fields are really just a number and a string respectively. Let’s take a look at some other use cases we’ve found for Server-Timing.

Cache Source

Whether a CDN sits in front of your origin or you have something homegrown, there’s a good chance that you are utilizing some sort of tiered caching strategy. Server-Timing is the perfect mechanism to communicate exactly which level of cache ultimately served the response. Here, we will treat description as an enum and omit the duration field:

|

|

Where [cache source] can be one of:

- “warm”

- “cold”

- “origin” 😦

Collecting and reporting on the cache source of our resources will allow us to monitor our caching strategy, making sure our assets are cached as close to the client as possible. Ideally, your RUM tool allows you to setup alerts based on custom data. That way you can be notified should your caching regress.

Image Adornment (a11y)

Let’s look at an example where a site provides some personalization by showing the users avatar somewhere in the header of the site.

|

|

To draw that simple image, the browser needs (at least) three pieces of data:

- the bytes of the png file

- the alt value

- the title value

As all of those are dynamic, being based on the current user. Wouldn’t it be cool if we could retrieve all three pieces of data with just one network request? With Server-Timing and PerformanceObserver, you can!

While serving the image from your backend, you can emit the dynamic accessibility attributes as Server-Timing headers like this:

|

|

Back in Javascript land, you can setup a PerformanceObserver to listen for supported attributes and update the appropriate attributes on the correct <IMG> node.

You can see the code for a prototype of this pattern here.

Distributed Tracing

As mentioned in @yoav’s WebPerfWG meeting summary post, the good folks of the Distributed Tracing Working Group are exploring using Server-Timing headers to help them stitch together server-side traces, potentially using reserved traceparent and tracestate entry names.

Whether standard or not, web-exposing backend logs via Server-Timing can help developers debug network or backend issues directly from the UI. Developers can ship down transaction IDs, server context, or even full URLs to other backend monitoring systems - perhaps only when a cookie or other request header is present.

|

|

Pre-correlating diagnostic URLs allows developers to drill into those backend traces, directly from the console or devtools of the browser, with just a click!

Value Confirmation

Let’s say you are already implementing some sort of on-demand resource compression or optimization. Server-Timing is a great mechanism to communicate the number of bytes you saved your users (and their data plans!). For same-origin resources, Resource-Timing reports encodedBodySize, which is the number of bytes of the body of the resource, before decoding. If your server were to emit the original size of the resource as it sits on disk (before any compression or optimization) like this:

|

|

it would very easy for you to aggregate the total bytes saved for a given page load, like this.

A/B Testing

Let’s say you are A/B testing your all-important hero image. If you hold the URL for each experimental hero image constant, and make the bucketing decision during the image actual request, then you can use Server-Timing to communicate which experimental bucket the user fell into.

|

|

Or consider the multi-CDN use case, which more and more big name sites are playing with. For same-origin resources, Resource Timing data can help us uncover so many potential bottlenecks: connection contention, slow DNS resolution, non-ideal caching, missing compression, etc. But in a multi-CDN world, if you don’t know which CDN served the resource, you don’t even know where to start your tuning! Image the analytic possibilities that exist if all CDNs were to self-report with one simple response header, like:

|

|

Wrapping it up

Server-Timing is a fairly lightweight way of web-exposing data collected from the server. Developers can use it to bubble up timing or any other information about network requests to the browser, which makes it easy to correlate with other RUM data. If your organization is making use of Server-Timing in a unique way, I’d love to hear about it - hit me up on the twitters and tell me all about it!

Many thanks to Nic Jansma, Colin Bendell, and Yoav Weiss for the editing, motivation, and overall awesomeness.

Happy New Year, everyone! 🎆 🍾